How to speed up building Docker images

In this guide, we will explain what you can do to speed up building Docker images.

In general, there are three aspects that significantly affect building and working with Docker:

- Understanding Docker layers and structuring the Dockerfile to maximize their efficiency

- Reducing the weight of the Docker image

- Automating image builds with pipelines

Below, we cover each of them in a separate section, with the essential information that will help you deliver well-optimized, lightweight images and use them in your delivery process.


Docker layers

How layers affect building speed

The way Docker caches layers has a serious impact on build speed. When building an image, Docker reuses the cached version of each layer for as long as nothing has changed. For most instructions, "changed" means adding a new line to the Dockerfile or modifying an existing one. Keep in mind that as soon as one layer has to be rebuilt, every layer after it is rebuilt as well. However, ADD and COPY follow different rules:

- If we use the ADD or COPY instruction to add a file, e.g. ADD package.json /path/package.json, the build process calculates a checksum of the added file and compares it with the previous version. The result determines whether the cache is used.

- If we use ADD or COPY to add a directory, the checksum covers every file in that directory, so the layer is rebuilt whenever any of those files changes. When the directory is the whole project (e.g. COPY . /app), that means almost every build.
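
To make these rules concrete, here is a small annotated Dockerfile. It is a sketch rather than a real project: the base image, config.json, and the src/ directory are hypothetical placeholders, and the comments note what Docker compares when deciding whether to reuse each layer.

```dockerfile
# Hypothetical example: config.json and src/ stand in for your own files
FROM debian:bookworm-slim
WORKDIR /app

# RUN: Docker compares only the command string, so this layer is reused
# until the line is edited or a layer above it is rebuilt
RUN mkdir -p /app/logs

# COPY of a single file: reused as long as the file is unchanged
# (its modification time is ignored)
COPY config.json /app/config.json

# COPY of a directory: every file under src/ is checksummed, so editing
# any one of them rebuilds this layer
COPY src/ /app/src/

# Once a layer is rebuilt, every instruction after it is rebuilt as well,
# even though this line itself did not change
RUN ls -R /app
```

Building this twice in a row should reuse every layer, while editing a single file under src/ should rebuild only the last two instructions.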

How to write Dockerfile layers to optimize building speed

Here are a few essential tips that will help you build and use Docker images much faster:

- Add only what is necessary. This may sound obvious, but every RUN, COPY, or ADD instruction adds another layer, and whatever it adds makes the image bigger.

- Place the most frequently changed layers at the bottom of the Dockerfile, because a change in one layer invalidates the cache for every layer after it.

- If some layers depend only on specific files, add those files individually in an earlier layer instead of copying the whole directory at once.

A good example is fetching dependencies with a package manager such as npm or Composer. If we write the Dockerfile like this:

```dockerfile
WORKDIR /app
COPY . /app
RUN npm install
```

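the dependencies will be fetched again every time any file in the project changes, because COPY . /app invalidates the cache for that layer and for every layer after it, including RUN npm install.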

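However, if we write it like this:

```dockerfile
WORKDIR /app
# Copy only the dependency manifests (package.json and, if present, package-lock.json)
COPY package*.json /app/
# --omit=dev skips devDependencies (npm 7 and later)
RUN npm install --omit=dev
# Copy the rest of the source code last, since it changes most often
COPY . /app
```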

the dependencies are fetched again only when the contents of package.json (or package-lock.json) change.

- Pay attention to the number of layers and consider merging them where possible. The more layers you have, the longer it can take to build the image and start the container. Even when the image is built entirely from cached layers, calculating the checksums still takes time.

For example, it's better to run:

```dockerfile
RUN apt-get update -y && \
    apt-get install -y git
```

than:

```dockerfile
RUN apt-get update -y
RUN apt-get install -y git
```

Whenever you feel the urge to split something into separate layers, think about how it could affect caching.
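
Docker's own Dockerfile best-practice guide recommends always combining apt-get update with apt-get install in the same RUN instruction: because a RUN layer is cached by its command string, a separate RUN apt-get update layer can stay cached while a later install line changes, so the install would run against an old package index. The sketch below follows that pattern and also deletes the package index in the same layer, which ties in with the image-size tips in the next section. The base image and the git package are only examples.

```dockerfile
FROM debian:bookworm-slim

# One RUN instruction = one layer:
# - editing the package list reruns apt-get update as well, so the install
#   never uses a stale, cached package index
# - --no-install-recommends skips recommended packages we did not ask for
# - deleting the index in the same RUN keeps those files out of every layer
RUN apt-get update \
    && apt-get install -y --no-install-recommends git \
    && rm -rf /var/lib/apt/lists/*
```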

Docker Image Size

When it comes to Docker, size matters. The most basic reason is working with Docker registries: the bigger the image, the longer it takes to push it to and pull it from the registry. And if you automate the process with CI/CD, large images can significantly slow down your delivery process.

How to write the Dockerfile to make the image smaller

- Use lightweight base images in FROM. For example, if you use Node, you can find lighter variants of the image among Node's tags on Docker Hub: just look for tags with "slim" in the name, such as node:20-slim. It's also worth considering images based on Alpine Linux, which are much smaller than those based on distributions such as Ubuntu or Debian.

A good practice is to check how the images defined in FROM are built (there's usually a link to the Dockerfile in the image's details on Docker Hub). This way you'll know exactly what is installed in the image you're using, which can be very important for security.

- Install only the most essential things in the image.

- Use a .dockerignore file to exclude everything you don't need from the build context, so that instructions such as COPY . /app only add the files you actually need.

- The final image should not contain anything that is needed only during the build. For example, you can use Maven to build a Java app, but once the application is built, only the WAR file is needed in the container. This can be handled in two ways:

  - Build the application first, and then build the image using the created artifacts as the build context. In this case, your delivery pipeline will have two stages: one that builds and tests the application, and one that builds the image from the Dockerfile.

  - Use a multi-stage build that builds the app in one image and copies the artifact into the next one. When using this option, remember about Docker layers and cache!
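
Here is a minimal multi-stage sketch for the Maven example. It assumes a standard Maven project layout (pom.xml plus src/) that produces exactly one WAR file in target/ and runs on Tomcat 10.1; the image tags are examples, not recommendations. The layer-ordering tips apply inside the build stage too: pom.xml is copied and its dependencies resolved before the frequently changing sources are added.

```dockerfile
# Stage 1: build the WAR with Maven (the tag is an example)
FROM maven:3.9-eclipse-temurin-17 AS build
WORKDIR /src

# Copy only pom.xml and download dependencies first; this layer stays
# cached until pom.xml changes
COPY pom.xml .
RUN mvn -B dependency:go-offline

# The sources change most often, so they are copied last
COPY src ./src
RUN mvn -B package

# Stage 2: the runtime image gets a JRE-based Tomcat and the WAR only;
# Maven, the JDK and the sources stay behind in the build stage
FROM tomcat:10.1-jre17
# Assumes the build produces exactly one WAR file in target/
COPY --from=build /src/target/*.war /usr/local/tomcat/webapps/ROOT.war
```

dependency:go-offline resolves most, but not necessarily all, of what mvn package needs, so a few downloads may still happen in the later layer.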

Docker Delivery Pipeline

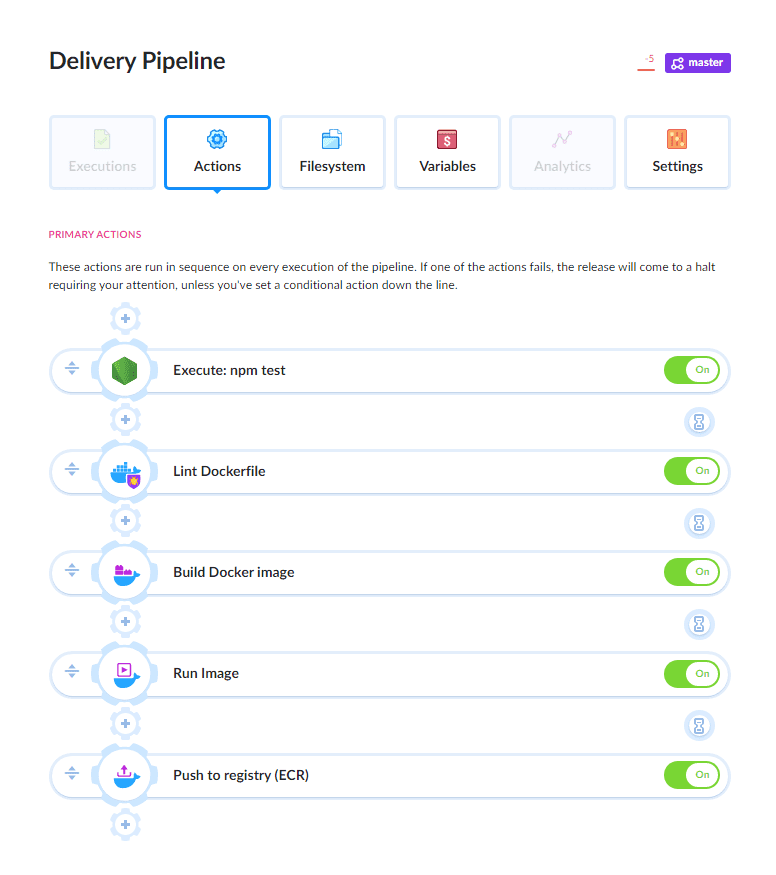

When introducing Docker into CI/CD, the first thing to check is whether your CI/CD tool supports layer caching. Unfortunately, this is not an industry standard yet, and many SaaS tools do not offer it. The second thing is to optimize the delivery pipeline itself. Here's the workflow of a properly configured pipeline:

- Test the application.

- Check the Dockerfile for semantic errors, i.e. look for mistakes that may, for example, make the image behave differently after every build even though the Dockerfile has not changed.

- Build the Docker image from the Dockerfile.

- Test whether the image runs properly.

- Push the image to a Docker registry.

- Run the image on a server, for example on a Kubernetes (K8s) cluster or on cloud services such as AWS Fargate or Google Cloud Run.
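
As an illustration of the Dockerfile check step: one common cause of images that change between builds without any edit to the Dockerfile is a floating base-image tag such as latest, and Dockerfile linters such as hadolint warn about it. The sketch below contrasts the two forms; the version number is only an example, and pinning an image digest (FROM image@sha256:...) is stricter still.

```dockerfile
# Avoid: "latest" may point to a different image each time it is pulled,
# so two builds of this unchanged file can produce different images
# FROM node:latest

# Prefer: an explicit version tag (the version shown is only an example)
FROM node:20.11.1-slim
WORKDIR /app
```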

The pipeline above was built in Buddy, a CI/CD service with full support for Docker (including layer caching). If you'd like to learn more, see Buddy's detailed instructions on building Docker delivery pipelines.